Apple is reportedly planning to launch AI-powered glasses, a pendant, and AirPods

Source: The Verge

Apple is reportedly aiming to start production of its smart glasses in December, ahead of a 2027 launch. The new device will compete directly with Meta’s lineup of smart glasses and is rumored to feature speakers, microphones, and a high-resolution camera for taking photos and videos, in addition to another lens designed to enable AI-powered features.

The glasses won’t have a built-in display, but they will allow users to make phone calls, interact with Siri, play music, and “take actions based on surroundings,” such as asking about the ingredients in a meal, according to Bloomberg. Apple’s smart glasses could also help users identify what they’re seeing, reference landmarks when offering directions, and remind wearers to complete a task in specific situations, Bloomberg reports.

-snip-

Apple’s plans for AI hardware don’t end there, as the company is expected to build upon its Google Gemini-powered Siri upgrade with an AirTag-sized AI pendant that people can either wear as a necklace or a pin. This device would “essentially serve as an always-on camera” for the iPhone and has a microphone for prompting Siri, Bloomberg reports. The pendant, which The Information first reported on last month, is rumored to come with a built-in chip, but will mainly rely on the iPhone’s processing power. The device could arrive as early as next year, according to Bloomberg.

Apple could also launch upgraded AirPods this year, which Gurman previously said could pair low-resolution cameras with AI to analyze a wearer’s surroundings.

Read more: https://www.theverge.com/tech/880293/apple-ai-hardware-smart-glasses-pin-airpods

The Trump regime must love the AI bros' quest to create a society where everyone has themselves and everyone around them under surveillance at all times.

They just need lots of data centers to handle the non-stop data gathering by all the dumb Americans tech lords can convince to use the devices.

The pendant with the always-on camera and microphone sounds even more ideal for surveillance than a camera in an eyeglass frame that might have to be turned on and would light up to show you're recording everyone and everything around you.

And if you think the Trump regime won't demand that data...

FalloutShelter

(14,344 posts)

Multichromatic

(87 posts)Why should we help corporations set up an unconstitutional surveillance state?

Polybius

(21,689 posts)Pretty cool. You can listen to music, make calls, take pics, etc. If you say "Hey Meta, what am I looking at?", it will tell you what it sees in detail.

Not sure why so many hate this tech, but it's most likely a generation thing.

highplainsdem

(61,087 posts)recording video and/or audio with them, or using them with a facial recognition app to find out who they are and maybe pretend to have met them before. Or try to find out where they live, even before they've given you their name.

They're essentially surveillance devices. What they pick up will be saved by Meta and will become available to the government, if the authorities want it.

People are getting rid of their Ring devices, too.

I wouldn't trust anyone wearing smart glasses.

I saw video on YouTube from someone recording an Oasis concert while wearing smart glasses, and at no point did she tell the people she was talking to that she was recording them from a couple of feet away.

At least if someone is using a smartphone for recording, it's usually pretty obvious.

Polybius

(21,689 posts)When recording or looking, it emits a bright light, letting anyone know that it is being used. Also, Ray-Ban Meta glasses do not have built-in facial recognition technology that identifies people.

I have Ring too. Why are people getting rid of it? What's it gonna see, me leaving for work? Maybe getting the mail? Just wear pajamas and it won't be an issue.

highplainsdem

(61,087 posts)And even without it being built in, there are facial recognition apps that anyone wearing smart glasses can use.

People don't like being surveilled and recorded by just anyone they meet, anywhere.

And people who want to record others without them knowing are creeps most people wouldn't want to know.

Editing to add that in the current political climate, no one should trust a stranger wearing smart glasses.

Polybius

(21,689 posts)From your link:

Also, there are no outside apps that are official that you can download, at least without rooting your device.

No comment about the light that goes off that alerts others that it's recording? I guess you missed it.

highplainsdem

(61,087 posts)they've already tested smart glasses recording strangers and discovered people don't notice the light.

No AI user deserves wearable AI at the expense of the privacy of everyone they meet.

They're a violation of other people's rights.

It's against the law in most states to record a phone conversation without letting the person you're talking to know you're recording.

If anyone has a legitimate reason to need smart glasses, they should be made glaringly obvious to everyone around.

Polybius

(21,689 posts)Meta intentionally built visible safeguards into the design. The recording LED isn’t optional — you cannot simply cover it with your hand or tape and keep recording. If you try to block it, the glasses won’t record. The only theoretical workaround would involve physically damaging the glasses, like drilling into them to remove the LED, which is complicated, risky, and could almost certainly destroy a very expensive product. That’s not something the average person is going to do.

And as for the claim that “people won’t notice the light” — it’s extremely bright and obvious when recording. If someone truly wouldn’t notice that LED, they probably wouldn’t notice someone discreetly taking a photo with a smartphone either. Smartphones are far more common, easy to conceal, and far less regulated in how they signal recording.

This isn’t the first time new camera technology has sparked privacy panic. When camera phones first became mainstream in the early 2000s, many people insisted they were unnecessary and invasive. There were serious arguments from old-timers, such as “no one needs a camera on them at all times” and that only creeps would want that capability. Fast forward over twenty years, and smartphones with cameras are completely normalized. Society adapted, etiquette evolved, and life went on.

As for practical uses, there are plenty — and most are completely ordinary. The glasses are incredibly convenient for hands-free calls and music while walking. The voice assistant features are genuinely useful — being able to say, “Hey Meta, what am I looking at?” and get real-time context is impressive, helpful, and not to mention fun. And sometimes you just need to capture a quick moment without fumbling for your phone — like a deer crossing your path or something happening in real time that would be gone by the time you unlock your screen.

Like any technology, the glasses can be misused — but so can a phone, a laptop, or practically any recording device. The key difference here is that Meta clearly anticipated privacy concerns and built visible, enforceable safeguards directly into the hardware. That’s not negligence — that’s responsible design.

Technology evolves. The question isn’t whether it exists — it’s whether it’s built with thoughtful protections. In this case, it clearly is.

highplainsdem

(61,087 posts)or would you wonder if they're a pedo trying to record the kids surreptitiously - and since they like AI, maybe using whatever they record for deepfake porn?

Someone wearing smart glasses does not deserve the benefit of the doubt. No one doing surreptitious surveillance does.

Smart glasses should be banned, and people using them should be shunned or banned from places where people don't want to be surveilled/recorded. Think of creeps wearing smart glasses in men's rooms, for instance. Or in dressing rooms used by multiple people.

Polybius

(21,689 posts)If a relative or close friend that I trusted was watching my kids or nephew and happened to be wearing Meta's, that's fine with me. They have their cell phones on them too.

Banned? That's an insane argument to make. This isn't North Korea. Dressing rooms? Sure, that would bother me. But a creep can sneak a cell pic in there too. Or, worse yet, have a dedicated spy device (which are legal to own, btw). Those are just the risks we have to take in a free society. Freedom comes with the good and bad.

highplainsdem

(61,087 posts)video and audio at any time. Google Glass smart glasses were discontinued because people objected to them. (See the link in reply 11.)

It's legal to own a dedicated spy device, but illegal to put them in bathrooms or changing rooms or other areas where people expect privacy. And creeps taking photos there will get in trouble, too.

You want freedom for smart glass users, and everyone else to lose their rights not to be recorded/surveilled.

Neither you nor anyone else deserves that freedom.

They're already having to ban smart glasses for SATs because they can't trust the AI users wearing them not to cheat.

If you think people shouldn't mind being recorded, I suggest you try walking around in public for a while, holding up your smart phone everywhere you face, telling everyone you look at that you're recording them.

You're not likely to find very many people happy about that. Some might try to drive you away, some might call the police, and if you try this in any business you'll probably be asked to leave.

You want the "freedom" to do that surreptitiously with smart glasses.

That's creepy.

Polybius

(21,689 posts)I think you’re framing this as a zero-sum issue — that giving someone the ability to use smart glasses automatically strips everyone else of their rights. That’s not how this works.

First, in most public spaces in the United States, there is no legal expectation of privacy. That standard already applies to smartphones, dashcams, security cameras, GoPros, and even doorbell cameras. The Ray-Ban Meta Smart Glasses don’t create a new legal reality — they exist within the same one that’s been in place for decades.

Second, the idea that this is about “surreptitious” recording ignores the built-in safeguards. The glasses have a mandatory recording LED that cannot simply be covered to keep filming — the device literally won’t record if you block it. That’s more transparency than most phones provide. With a phone, someone can appear to be texting while recording. With these glasses, there’s a visible signal by design.

On the SAT example — schools banning devices during exams isn’t new or unique to smart glasses. Phones, smartwatches, calculators, even certain headphones have all been restricted in testing environments. That’s not evidence that the technology itself is unethical; it’s just normal policy adapting to new tools. We don’t ban smartphones from society because they’re banned in testing centers.

Your suggestion about walking around openly holding up a phone and announcing you’re recording isn’t really comparable. Social norms matter. If someone walks around aggressively filming people at close range, they’ll make others uncomfortable whether they’re using a phone, a DSLR, or anything else. That’s a behavior issue, not a hardware issue. The same social expectations would apply to someone misusing smart glasses.

The reality is that most people using them are doing very ordinary things — hands-free calls, music, quick photos of things happening in real time, or using AI features for accessibility and convenience. The overwhelming majority of users aren’t trying to secretly surveil strangers.

Every new recording technology has triggered fear at first. Camera phones did. GoPros did. Even early portable camcorders did. Society adjusted, etiquette developed, and life continued.

You may personally find the concept uncomfortable — that’s fair. But discomfort doesn’t automatically equal a loss of rights. The legal framework hasn’t changed. The social norms haven’t collapsed. And the device was intentionally designed with visible safeguards to address exactly the concerns you’re raising.

Calling it “creepy” assumes malicious intent. Most of the time, it’s just another evolution of the camera that’s already been in everyone’s pocket for 20 years.

As for creeps getting in trouble, good. Lock up anyone who takes pics in bathrooms or changing rooms.

This is clearly a generational issue, and we're unlikely to ever see eye to eye on this.

highplainsdem

(61,087 posts)prices typically come down quickly. Privacy concerns don't go away.

Sigh. Just google

can smart glasses led light be disabled

and you'll quickly find lots of results on disabling that light, such as this one:

https://www.404media.co/how-to-disable-meta-rayban-led-light/

See the article with video below, which I saw mentioned on social media by people commenting that those being recorded didn't notice the LED light or know what it meant.

https://euroweeklynews.com/2025/12/06/ai-glasses-spark-rip-privacy-alarm-in-the-netherlands-a-new-era-of-recognition/

See this Reddit thread

https://www.reddit.com/r/RaybanMeta/comments/1n80c1o/is_the_led_light_super_noticeable/

and the comments in the replies about people being recorded not noticing the LED light at all (especially in bright light, like on a sunny day), and people using these surveillance devices buying stickers to block the LED lights without shutting off the camera, or just drilling out the light while keeping the camera functioning.

Your saying you wouldn't record surreptitiously does nothing to assure anyone that others using them won't use them that way.

You're also ignoring the fact that AI companies are data-gathering, using pretty much everything collected for training their AI, and they WILL share that data with authorities including the Trump regime.

So even if you think you're recording just for yourself, you have no way of assuring anyone around you that the Trump regime won't end up with everything your smart glasses capture.

Someone wearing smart glasses and recording at a protest is a threat to everyone at the protest. Someone using a smart phone just to openly record ICE agents is much less likely to end up providing closeup shots of other protesters to federal authorities, or conversations that ICE might think make them a threat to ICE officers who aren't breaking the law, or to Trump. Do you really want to record some angry remark about Trump that could get someone reported to the Secret Service and prosecuted, when they hadn't been serious about what they said and retracted it a second later?

Even if you aren't using facial recognition software, you can't stop others from using that software on what you record.

You're effectively mobile surveillance for the AI bros and government, every time you use smart glasses to record.

And there WILL be creeps using this software to record because they're perverts or hope to record something for blackmail or just to embarrass others.

Your use of AI, all by itself, counts against you being as trustworthy as you would be otherwise, since AI is so often used for fraud of different types, from students cheating, to workers pretending to have done work they had AI do instead, to scams by professional criminals.

Wanting to use AI without others seeing you use a smartphone, and record without an obvious camera including a smartphone camera, does suggest possible deceit even more, and not just convenience for you.

And this could end up making people wearing ordinary glasses appear suspicious at first, which would be really unfair to them.

Polybius

(21,689 posts)There’s a lot there, so I’m going to respond point-by-point rather than brushing it off.

1. “Prices come down. Privacy concerns don’t.”

True — privacy concerns don’t disappear. But they also aren’t static. They get addressed through design changes, policy, norms, and law. That’s exactly what happened with smartphones, dashcams, Ring doorbells, and body cams. None of those eliminated privacy concerns — they forced clearer rules and expectations.

The Ray-Ban Meta Smart Glasses are operating inside an already-established legal framework about recording in public. They didn’t create that framework.

2. “You can disable the LED.”

Yes, I’m aware that people online experiment with hardware modifications. But that’s not the same as normal use.

If someone:

Drills into a $300–$400 device

Risks breaking it

Voids the warranty

Potentially damages internal components

That’s intentional tampering.

You can also:

Jailbreak phones

Disable shutter sounds

Install hidden camera apps

Modify drones

The existence of modding communities doesn’t mean the default product is designed for secrecy. It means determined people can modify hardware — which is true of almost any device with a camera.

If someone is willing to physically alter hardware to secretly record others, they were already willing to violate norms. The glasses didn’t create that intent.

3. “People don’t notice the LED.”

In bright sunlight, yes — visibility of any small light is reduced. The same is true of:

A phone screen angled downward

A smartwatch recording

A GoPro clipped to clothing

No indicator system is perfect in every lighting condition. The relevant question is: Did the manufacturer attempt visible disclosure? In this case, yes.

And again — a smartphone can record far more discreetly than someone turning their head directly at you with glasses that visibly light up.

4. “AI companies gather data and share with authorities.”

This is where the argument shifts from device ethics to broader distrust of tech companies and government. That’s a separate — and legitimate — policy debate.

But it applies equally to:

iPhones

Android phones

Social media uploads

Cloud backups

Email providers

If someone records a protest on a smartphone and uploads it to Instagram, that footage is also on corporate servers and accessible via lawful process. That risk isn’t unique to smart glasses.

And importantly: users control whether media is uploaded or kept local. Not everyone is live-streaming everything to AI systems.

If the concern is mass surveillance or government overreach, that’s about data governance laws, not about whether a camera is mounted on your face or in your hand.

5. “Someone recording at a protest is a threat.”

Anyone recording at a protest — with any device — creates that same dynamic. Phones already capture high-resolution, zoomed, stabilized video with far greater detail than smart glasses.

In fact, someone openly holding a phone above a crowd often captures more faces than someone wearing glasses casually looking around.

Again, the risk you’re describing is tied to recording in general — not uniquely to this product category.

6. “Creeps will use it.”

Creeps already:

Use phones

Hide cameras

Install spy devices

Misuse AirTags

Abuse drones

We don’t ban all smartphones because some people take upskirt photos. We criminalize the behavior.

Technology doesn’t eliminate bad actors. It sets default guardrails and relies on laws for enforcement.

7. “Using AI counts against your trustworthiness.”

That’s a broad generalization.

AI is used for:

Accessibility tools

Navigation assistance

Language translation

Image recognition for the visually impaired

Productivity support

Saying “using AI makes you less trustworthy” is like saying “using a calculator makes you dishonest” because some students cheat.

Intent matters. Context matters.

8. “Wearing glasses will make everyone suspicious.”

We already went through this phase with:

Bluetooth headsets

AirPods

Early smartwatches

Body cameras

At first, people reacted strongly. Over time, norms adjusted. Most people now assume someone wearing AirPods is listening to music — not secretly recording.

If smart glasses ever become widespread, visible indicators and cultural familiarity will normalize their presence the same way smartphones did.

The core disagreement

You’re arguing from a worst-case lens:

What if someone disables safeguards?

What if data is misused?

What if the government abuses it?

What if a creep exploits it?

Those are valid concerns — but they apply to nearly all modern recording technology.

I’m arguing from a proportionality lens:

The legal environment hasn’t changed.

The default hardware includes visible disclosure.

The vast majority of use cases are mundane.

Bad actors already have more powerful tools in their pockets.

If the issue is broader AI data practices or government overreach, that’s a serious civic discussion. But that’s not unique to these glasses.

The device itself doesn’t automatically convert someone into “mobile surveillance for AI bros.” It’s a camera — in a different form factor — operating under the same laws, norms, and risks that already exist.

We can debate regulation and corporate data policy. But treating the hardware category itself as inherently sinister assumes malicious intent by default, and that’s a much bigger claim than “this technology has tradeoffs.”

highplainsdem

(61,087 posts)You're trivializing how much easier smart glasses make spying.

You're exaggerating legitimate uses for AI, especially needing to access an AI model when you're just walking around.

Your wearing smart glasses is still a good reason for others to be suspicious of you.

At least some CBP agents now wear Meta's smart glasses:

https://www.404media.co/a-cbp-agent-wore-meta-smart-glasses-to-an-immigration-raid-in-los-angeles/

“It’s clear that whatever imaginary boundary there was between consumer surveillance tech and government surveillance tech is now completely erased,” Chris Gilliard, co-director of The Critical Internet Studies Institute and author of the forthcoming book Luxury Surveillance, told 404 Media.

“The fact is when you bring powerful new surveillance capabilities into the marketplace, they can be used for a range of purposes including abusive ones. And that needs to be thought through before you bring things like that into the marketplace,” the ACLU’s Stanley said.

-snip-

Update: After this article was published, the independent journalist Mel Buer (who runs the site Words About Work) reposted images she took at a July 7 immigration enforcement raid at MacArthur Park in Los Angeles. In Buer's footage and photos, two additional CBP agents can be seen wearing Meta smart glasses in the back of a truck; a third is holding a camera pointed out of the back of the truck. Buer gave 404 Media permission to republish the photos; you can find her work here.

Polybius

(21,689 posts)Big platforms absolutely want data, and they’ve earned skepticism over the years. I’m not arguing that Meta — or any AI company — deserves blind trust.

What I am pushing back on is the idea that smart glasses uniquely transform ordinary people into surveillance agents in a way smartphones, body cams, dashcams, and social media already haven’t.

1. “You’re trivializing how much easier smart glasses make spying.”

They change the form factor. They don’t change the underlying capability.

A modern smartphone:

Has a higher-resolution camera

Has optical zoom

Has stabilization

Can live-stream instantly

Can upload automatically to cloud storage

If someone wants to secretly record people, a phone is already a far more powerful tool. Smart glasses are actually more limited in angle, battery, and control. They’re not some quantum leap in surveillance — they’re a hands-free camera.

The difference is subtlety of posture, not power of capture. And subtle recording has existed for years via phones held low, chest-mounted cameras, button cams, etc.

2. “You’re exaggerating legitimate uses for AI while walking around.”

Not really. For some people, especially those with visual impairments, AI description features are genuinely useful. Even for fully sighted people, real-time translation, object recognition, or contextual info can be practical.

Is it essential for survival? No.

But neither is:

AirPods

Smartwatches

Voice assistants

Fitness trackers

Convenience tech doesn’t need to be life-or-death to be legitimate.

3. “Your wearing smart glasses is still a good reason to be suspicious.”

Suspicion isn’t a rights framework — it’s a social reaction.

People were suspicious of:

Early Bluetooth earpieces

Google Glass users

People filming with GoPros

People flying drones

Over time, norms settle. Suspicion doesn’t automatically equal wrongdoing. If someone behaves normally, most of that suspicion fades in context.

4. Law enforcement using them

The 404 Media article you cited is important. If U.S. Customs and Border Protection agents are wearing Ray-Ban Meta Smart Glasses during immigration raids, that absolutely raises civil liberties questions.

But notice something critical:

That concern is about government use, not civilian ownership.

Law enforcement already uses:

Body cameras

Facial recognition databases

Drones

Stingrays

License plate readers

If agencies adopt a consumer product, that’s a policy and oversight issue. It doesn’t logically follow that ordinary citizens shouldn’t own the device.

Otherwise, by that reasoning, once police started using smartphones, civilians should’ve stopped carrying them too.

5. “Meta is guiding privacy norms because it’s early.”

That’s fair — early-stage tech often has company-driven norms before regulation catches up.

But that’s not permanent. Smartphones were once dominated by a few players shaping norms. Now privacy law, court rulings, and public pressure heavily influence what companies can and cannot do.

If smart glasses become widespread, they will fall under:

State privacy laws

Federal wiretap laws

Biometric data laws (in some states)

Civil liability

Meta doesn’t get to operate outside the legal system just because the form factor is new.

6. The protest scenario

You’re worried about:

Facial recognition

Protester identification

Government abuse

Someone saying something angry on camera

Those are serious concerns — but again, smartphones already enable all of that at scale. In fact, most protest footage that ends up online today is captured via phones and posted to social platforms.

The risk you’re describing is about:

Data retention

Uploading to corporate servers

Government subpoenas

Facial recognition databases

Those exist independently of smart glasses.

If someone is concerned about surveillance at a protest, the safest approach is digital hygiene — not assuming glasses are uniquely dangerous while phones are somehow benign.

7. “AI companies are desperate for training data.”

Yes, companies want data. But:

Users can control upload settings.

Not all captured footage is automatically used for training.

Policies around AI training data are under intense regulatory scrutiny globally.

If the issue is AI training practices, that’s a broader regulatory debate — not something solved by opposing one wearable device.

The real divide here

You’re arguing from systemic distrust:

Corporations will exploit data.

Governments will abuse access.

New tech amplifies surveillance creep.

That’s a coherent worldview.

I’m arguing that:

The surveillance ecosystem already exists.

Smart glasses are incremental, not revolutionary.

Misuse is a behavioral and regulatory issue, not an inherent property of the device.

Civilian ownership doesn’t equal endorsement of state surveillance.

It’s reasonable to demand strong data governance and limits on law enforcement use. I support that.

But equating every civilian wearer with “mobile surveillance for AI bros and the government” assumes malicious intent and inevitability of abuse — and that’s a leap.

The conversation we probably should be having isn’t “ban smart glasses” — it’s:

What are the default upload settings?

What transparency exists around AI training?

What limits exist for government acquisition of consumer-captured data?

Should visible indicators be standardized across all wearable cameras?

That’s a policy conversation.

Calling individual users inherently suspicious because they wear a new form factor camera feels less like a privacy argument and more like a presumption of guilt.

highplainsdem

(61,087 posts)The people who use Meta glasses in public are so creepy

https://www.reddit.com/r/Cameras/comments/1l6v0ib/the_people_who_use_meta_glasses_in_public_are_so/

Are wearing AI-powered Smart Sunglasses actually the creepiest thing in tech right now?

https://www.reddit.com/r/technology/comments/1pkqpo5/are_wearing_aipowered_smart_sunglasses_actually/

Meta raybans are creepy as all fuck

https://www.reddit.com/r/SeriousConversation/comments/1n820wi/meta_raybans_are_creepy_as_all_fuck/

Meta Rayban glasses used to identify folks on the street within seconds. They're also becoming more popular within rave and concert events. Should these devices be banned from all dance music events?

https://www.reddit.com/r/aves/comments/1prnot0/meta_rayban_glasses_used_to_identify_folks_on_the/

I don't want to interact with someone who is wearing smart glasses

https://www.reddit.com/r/unpopularopinion/comments/1hq3kdb/i_dont_want_to_interact_with_someone_who_is/

I Can't Help Feeling Like a Creep Wearing Meta's New Gen 2 Glasses

https://www.reddit.com/r/technology/comments/1omgrb3/i_cant_help_feeling_like_a_creep_wearing_metas/

Smartglasses spark privacy fears as secret filming videos flood social media | Technology News

https://www.reddit.com/r/privacy/comments/1qv7kue/smartglasses_spark_privacy_fears_as_secret/

Gen Z pushes back against smart glasses and cameras over privacy fears

https://www.reddit.com/r/technology/comments/1n5qsr5/gen_z_pushes_back_against_smart_glasses_and/

Polybius

(21,689 posts)Reddit amplifies strong reactions. That’s what it does. If you search “smart glasses creepy,” you’ll obviously find posts calling them creepy. If you search “Ray-Ban Meta awesome” or “Ray-Ban Meta useful,” you’ll find plenty of people praising them for convenience, accessibility, hands-free video, music, calls, and travel use. Online forums skew toward outrage and hot takes — that’s not the same thing as broad societal consensus.

The product we’re talking about — Ray-Ban Meta Smart Glasses — is selling well, being reviewed positively by major tech outlets, and being used by regular people for normal, boring reasons. That matters more to me than emotionally charged thread titles.

You can curate a list of links calling anything creepy:

AirTags were called stalking tools.

Drones were called spy machines.

GoPros were called invasive.

Early camera phones were labeled pervert devices.

Unless you still agree with those early opinions?

Every time, early adopters got side-eyed. Every time, a vocal online minority framed the tech in worst-case terms. And every time, usage normalized once people realized most owners weren’t villains.

There are also counterarguments all over Reddit and elsewhere, with users pointing out the LED indicator, people explaining they use them for biking, walking, or accessibility, people preferring them over pulling out a phone constantly, and users saying they feel less awkward recording because the device signals clearly when it’s active.

You’re presenting Reddit discomfort as proof of inherent wrongdoing. That’s just social friction around new tech. Social friction doesn’t equal ethical collapse.

If someone personally doesn’t want to interact with a person wearing smart glasses, that’s their choice. But that’s a social preference — not a moral verdict.

The internet will always have threads calling something creepy. That alone doesn’t make the technology illegitimate, and it doesn’t make every person wearing it suspicious by default.

travelingthrulife

(4,907 posts)Our 'need' for instant gratification will kill us.

Crowman2009

(3,466 posts)....

muriel_volestrangler

(105,894 posts)"which had been specially designed to help people develop a relaxed attitude to danger. At the first hint of trouble they turn totally black and thus prevent you from seeing anything that might alarm you."

Douglas Adams (The Restaurant at the End of the Universe)

FemDemERA

(740 posts)

Skittles

(170,451 posts)all complain about internet tracking being invasive

Red Mountain

(2,290 posts)HELL no!

Society will adjust. Signs on doors, peer pressure, I just don't know what else. For sure, the amount of data that even small-scale adoption of devices like these generates will create an enormous need for storage and processing space......which will mean new power sources and new data centers. Kind of like an AI "which came first....the chicken or the egg" question.

highplainsdem

(61,087 posts)in 2014 because of privacy concerns, and a lot of businesses and facilities banned them.

https://en.wikipedia.org/wiki/Google_Glass

That was before genAI, and unfortunately we have a lot of AI-addicted people now.

Crowman2009

(3,466 posts)

SheltieLover

(78,809 posts)

SheltieLover

(78,809 posts)

highplainsdem

(61,087 posts)especially considering how dangerous the Trump regime is.

But it has also surprised and disappointed me to see some Democrats and liberals apparently don't care that generative AI is based on worldwide theft of intellectual property, as long as any of the genAI tools resulting from that theft are in any way useful or amusing for them.

SheltieLover

(78,809 posts)Very disappointing, indeed.

As with all things, we all need to look at the potential for abuse.

Personally, I would never put some high tech gadget that close to my eyes.

yaesu

(9,163 posts)With lots of ass kissing.

hunter

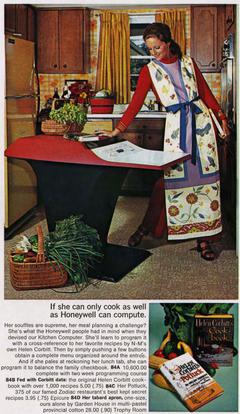

(40,520 posts)

If she can only cook as well as Honeywell can compute.

Her souffles are supreme, her meal planning a challenge? She's what the Honeywell people had in mind when they devised our Kitchen Computer. She'll learn to program it with a cross-reference to her favorite recipes by N-M's own Helen Corbitt. Then by simply pushing a few buttons obtain a complete menu organized around the entree. And if she pales at reckoning her lunch tabs, she can program it to balance the family checkbook

https://en.wikipedia.org/wiki/Honeywell_316

This Apple thing will be even better! If you leave it on all the time, uploading all your data to the cloud, your family will be able to make a simulation of your best self when you're dead.